Key policy considerations for national governments

There’s growing noise around ‘regulating’ AI.1 Some claim it’s too early,2 arguing that precautionary regulation could impede technical progress; others call for action,3 advocating measures to mitigate the risks of AI.

It’s an important problem, and both sides of the debate make compelling arguments. AI applications have the potential to improve output, productivity, and quality of life, so forestalling the developments that enable these advances carries a large opportunity cost. Equally, the risks of broad-scope AI applications shouldn’t be dismissed. There are near-term implications, like job displacement and autonomous weapons, and longer-term risks, like value misalignment and the control problem.

Regardless of where one sits on the ‘AI regulation’ spectrum, few would disagree that policymakers should have a firm grasp of the development and implications of AI. Given the pace of development, it’s unsurprising that most do not.

Asymmetry of Knowledge

Policymakers are still very much at the beginning of learning about AI. The US government held public hearings late last year to ‘survey the current state of AI’.4 Similarly, the UK House of Commons undertook an inquiry to identify AI’s ‘potential value’ and ‘prospective problems’.5

While these broad inquiries signify positive engagement, they also highlight policymakers’ relatively poor understanding, particularly compared to industry. This is understandable given that most AI development and expertise is concentrated in a select few organisations. This handful of organisations (literally, you could count them on two hands) possesses orders of magnitude more data than anyone else. That access to data, coupled with resources and technical expertise, has fuelled the rapid and concentrated development of AI.

A lack of in-house expertise is a problem for governments from a policy development perspective. As AI increasingly intersects with safety-critical areas of society, governments have a responsibility to act in the interests of their citizens. But if they lack the ability to formulate measured policies in line with those interests, unintended consequences could arise, placing their citizens at risk. Without belabouring scenarios of misguided policy, governments should prioritise building their own expertise. Whether they’re prepared or not, governments are key stakeholders: they hold a social contract with their citizens to act on their behalf. So, as AI is applied to safety-critical industries like healthcare, energy, and transportation, understanding its opportunities and implications is essential.

Ultimately, knowledge and expertise are central to effective policy decisions, and independence helps align policies with the public interest. While the spectrum of potential policy actions for safety-critical AI is broad, each with its own effects, inaction is also a policy position. It is the position most governments currently hold. I think it’s important they rigorously consider the implications of that choice.

Let me be clear: I’m not advocating for ‘more’ or ‘less’ regulation of AI. I’m advocating for governments to build their capacity to make effective and independent policy decisions. At the moment, few are qualified to do so. That’s a big reason why AI has developed in a policy vacuum.

‘Policy’ rather than ‘Regulation’

The term ‘regulation’ is not helpful in advancing the discourse on the role of governments in AI. ‘Regulation’ can evoke perceptions of restriction: a heavy hand impeding growth rather than nourishing progress. This is counterproductive, particularly in these early stages.

More useful, and less abrasive, is the term ‘policy’. Policy simply refers to a set of decisions that societies, through their governments, make about what they do and do not want to permit, and what they do or do not want to encourage.6 Policies can be ‘pro-innovation’, helping to accelerate the development and diffusion of technologies. Policies can also decelerate and redirect technological development and diffusion. Science and Technology policy has a long history of both, and everything in between.

So, the term ‘policy’ is necessarily flexible. It may sound like semantics. But language matters.

Safety-Critical AI

Safety-critical AI refers to autonomous systems whose malfunction or failure can lead to serious consequences.7 These could include adverse environmental effects, loss of or severe damage to equipment, harm or serious injury to people, or even death.

While there’s no formal definition of what constitutes ‘safety-critical’, governments already identify sectors they consider ‘critical infrastructure’. The US Department of Homeland Security identifies:

“16 critical infrastructure sectors whose assets, systems, and networks, whether physical or virtual, are considered so vital to the United States that their incapacitation or destruction would have a debilitating effect on security, national economic security, national public health or safety, or any combination thereof.”

Similarly, the Australian government (my home country) defines critical infrastructure as:

“… those physical facilities, supply chains, information technologies and communication networks which, if destroyed, degraded or rendered unavailable for an extended period, would significantly impact on the social or economic wellbeing of the nation or affect Australia’s ability to conduct national defence and ensure national security.”8

These include sectors like energy, financial services, and transportation. Scrolling through the list, we can see AI already being applied in all of these sectors.

This is as it should be. AI can improve productivity in the critical sectors of society and help us achieve more.

The problem is that AI system designers still face very real challenges in making AI safe. These challenges are exacerbated, and their importance heightened, when AI systems are applied to safety-critical sectors. The stakes are high.

Concrete Problems in AI Safety

Dario Amodei et al. provide an excellent account of these challenges in ‘Concrete Problems in AI Safety’. The paper lists five practical research problems related to accidents in machine learning systems (the dominant subfield of AI) that may emerge from poor design of real-world AI systems. The five problems can be summarised as follows:9

- Avoiding Negative Side Effects: ensuring that AI systems do not disturb their environment in negative ways while pursuing their goals.

- Avoiding Reward Hacking: ensuring that AI systems do not ‘game’ their reward functions by exploiting bugs in their environment and acting in unintended (and potentially harmful) ways to achieve their goal(s).

- Scalable Oversight: ensuring that AI systems do the right thing even when the true objective is too costly to evaluate frequently, so they must learn from limited feedback.

- Safe Exploration: ensuring that AI systems don’t make exploratory moves (i.e. try new things) with bad repercussions.

- Robustness to Distributional Shift: ensuring that AI systems recognise, and behave robustly, when in an environment different from their training environment.

Beyond these accidental failures, there are the safety problems posed by AI systems designed to inflict harm intentionally. We saw a taste of this during the 2016 US Presidential Election, when Russian actors used Twitter and Facebook bots to create and proliferate derogatory claims and ‘Fake News’ about the Clinton campaign. While most of these bots appear to have been ‘dumb AI’ (for example, programmed only to robotically retweet specific accounts), they mark a firm step towards AI-driven political propaganda. There’s an immediate risk that machine learning techniques will be applied at scale in political campaigns to manipulate public engagement. Such automated political mobilisation won’t be concerned with what’s ‘real’ or ‘true’; its goals are to build followings, change minds, and mobilise votes.

The risks of unintentional and intentional AI harm to the public are therefore significant. As representatives of the public, governments have a duty to: (a) develop institutional competencies and expertise in AI; and (b) institute measured and appropriate policies, guided by these competencies and expertise, that maximise the public benefits of AI while minimising its public risks.

Skilling-Up Governments

Improving internal AI competencies within governments is a recommendation that regularly arises.10 For the reasons above, it’s broadly agreed to be an important step. A key challenge is how to attract and retain talent.

If governments (particularly Western governments) are to develop internal AI expertise, they’ll inevitably compete for talent with the likes of Google and Facebook. These companies build their technical teams by offering enormous remuneration, lots of autonomy, great working conditions, and the social status of working for a company that is ‘changing the world’.

Working for the Department of Social Services on a government salary doesn’t quite have the same ring to it.

Recruitment is also shaped by the social mood. Nationalism is on the rise,11 trust in institutions has collapsed,12 and the internet has afforded more opportunities for flexible and independent labour than at any point in history. These cultural dynamics affect how governments operate and what people demand from them.

Of course, it’s possible for governments to stir inspiration and coordinate talent towards hard, technical goals. After all, we did ‘choose to go to the moon’. But the audacious and inspirational plans of the 1960s, encouraged by a culture of definite optimism, feel a far cry from the incremental plans and rising fog of pessimism that weigh on many governments today.

For problems as hard as AI policy, attracting the best and brightest is a crucial yet formidable task. It is especially difficult for roles at or below middle management.

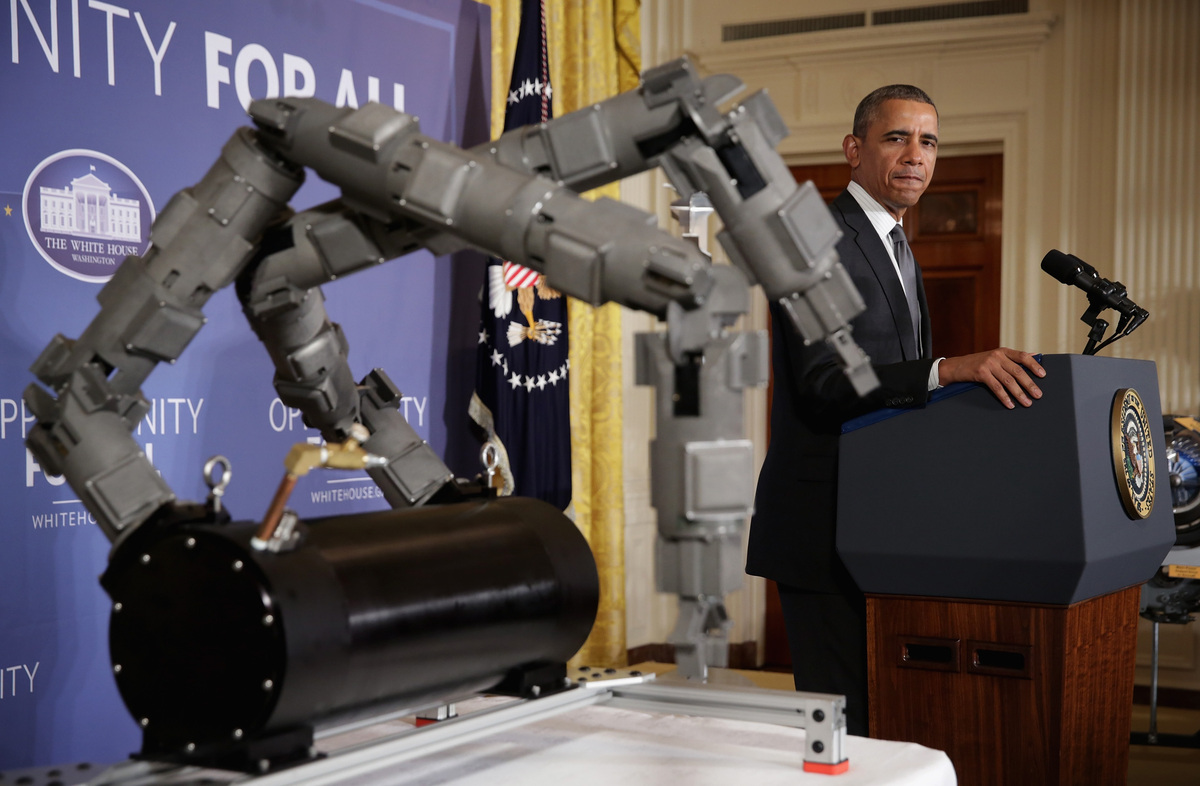

Governments are experimenting with ways to build technical talent, and there are some interesting initiatives on the periphery. For instance, the Obama Administration introduced the Presidential Innovation Fellows program, in which fellows serve for 12 months as embedded entrepreneurs-in-residence to help build the technological capacity of government agencies. The program attracts talent from the most prominent tech firms for ‘tours of duty’, giving fellows resources and support to work on important technical projects under the auspices of the Federal Government.

While positive, secondments alone won’t suffice for building AI competencies within governments. Because AI has such broad applications, affecting so many safety-critical areas, governments have a responsibility to be prepared. At this stage, preparation involves understanding the unique opportunities and implications of AI. For many governments, it’s not clear who is responsible for this task and where the expertise should reside.

This issue has led researchers, such as Ryan Calo13 and Matt Scherer14, to recommend the establishment of government agencies specifically for AI & Robotics.

The case for AI & Robotics Agencies

Efforts to address AI & Robotics policy decisions have been piecemeal, at best. Calo argues that, as AI is increasingly deployed across sectors, diffusing expertise across existing agencies and departments makes less and less sense.15 A more centralised agency would provide a repository of expertise to advise governments and formulate policy recommendations.

Why an Agency?

In the context of AI, Scherer outlines the following benefits that make administrative agencies an appropriate vehicle:16

- Flexibility – Agencies can be ‘tailor-made’ for a specific industry or particular social problem;

- Specialisation & Expertise – Policymakers in agencies can be experts in their field rather than the more generalist roles required by courts and legislatures;

- Independence & Autonomy – Agencies have more latitude to conduct independent factual investigations that serve as a basis for their policy decisions; and

- Ex Ante Action – Similar to legislatures, agencies have the ability to formulate policy before harmful conduct occurs.

What would an AI Agency do?

Views on the potential roles of AI & Robotics agencies vary, and they are necessarily country-specific. The key point of contention is how much enforcement power an agency would hold.

Calo’s view is that a Federal Agency should act, at least initially, as an internal repository of expertise: a standalone entity tasked ‘with the purpose of fostering, learning about, and advising upon’ the impacts of AI & Robotics on society.17 This would help cultivate a deep appreciation of the technologies underlying AI, and governments would have unconstrained access to independent advice on the development trends, deployment progress, and inevitable risks that AI actually presents. If the risks develop such that stronger safety regulations become necessary, an agency would already be in place to formulate these policies.

Both Scherer and Calo agree that an agency would provide an increasingly important resource for governments. However, Scherer proposes that a US federal AI agency should assume a more proactive role, sooner rather than later. His proposed regulatory framework is based on a voluntary AI certification process managed by the agency.18 Certified AI systems would enjoy limited liability, whereas uncertified AI systems would face full liability. (To learn more, read my blog article summarising the research, or read Scherer’s paper.)

Despite these differing views on scope, creating new agencies in response to new technologies has ample precedent. Radio, aviation, and space travel all led to the creation of new agencies across many nations. As these safety-critical technologies, and others, grew in capability and prominence, governments foresaw their opportunities and impacts and opted for dedicated agencies of expertise. Of course, the scope and responsibilities of these agencies vary, and some have been more effective than others. An important research task for AI policy development is therefore to assess the design, scope, and implementation of previous safety-critical government agencies, drawing out key lessons that might apply to AI & Robotics. (I intend to write more on this subject in forthcoming blog posts.)

What makes ‘effective AI Policy’?

This is perhaps the central question of AI policy. It speaks to the criteria that should be used to determine the merits of safety-critical AI policies. Specifically, what constitutes effective policies in safety-critical AI? How will we know they’re effective?

The development of such criteria is a necessarily interdisciplinary task. It requires thoughtful input from a diversity of stakeholders and careful consideration of any recommendations, because any authoritative criteria for assessing the effectiveness of AI policies could influence government actions. As the maxim often attributed to Peter Drucker goes: “What gets measured gets managed”.

Robust criteria need to assess near-term policies while also providing insight into their projected longer-term impacts. They also need to account for the host of policies currently in place that directly or indirectly affect AI, including de facto policies such as privacy laws, intellectual property, and government research & development investment.19 While the scholarship in AI policy is thin, some ideas have been put forth to advance research discussions.

Near-term Criteria

In their seminal research survey, ‘A survey of research questions for robust and beneficial AI’, the Future of Life Institute proposed the following points for consideration:20

- Verifiability of compliance – How governments will know that any rules or policies are being adequately followed

- Enforceability – Ability of governments to institute rules or policies, and maintain accountability

- Ability to reduce AI risk

- Ability to avoid stifling desirable technology development and other negative consequences

- What happens when governments ban or restrict certain kinds of technological development?

- What happens when a certain kind of technological development is banned or restricted in one country but not in other countries where technological development sees heavy investment?

- Adoptability – How well received the policies are by key stakeholders (the prospects of adoption increase when a policy benefits those whose support is needed for implementation and when its merits can be effectively explained to decision-makers and opinion leaders)

- Ability to adapt over time to changing circumstances

These policy criteria share similarities with those for other safety-critical technologies, such as nuclear weapons, nanotechnology, and aviation, so there are clear lessons to draw from the design and management of previous Science & Technology policies. A core challenge, however, is for policymakers to apply these lessons appropriately, recognise the unique challenges of AI, and develop policy responses accordingly. Doing so effectively will require a considered eye on the longer-term implications of any policy decisions.

Long-term Criteria

Professor Nick Bostrom et al. from the Future of Humanity Institute provide an overview of some key long-term policy considerations in their working paper ‘Policy Desiderata in the Development of Machine Superintelligence’. The paper focuses on policy considerations for Artificial General Intelligence (AGI) that are either ‘unique’ to AGI or ‘unusual’ in its context.

The paper distils the following desiderata:21

- Expeditious progress – Ensure that AI development is speedy and that its path leads, with high probability, to superintelligence. Socially beneficial products and applications are made widely available in a timely fashion.

- AI safety – Techniques are developed to make it possible to ensure that advanced AIs act as intended.

- Conditional stabilisation (kill switch) – The ability to temporarily or permanently stabilise the AI to avert catastrophe.

- Non-turbulence (social stability) – The path avoids excessive efficiency losses from chaos and conflict. Political systems maintain stability and order, adapt successfully to change, and mitigate socially disruptive impacts.

- Universal benefit – All humans who are alive at the transition to AGI get some share of the benefit, in compensation for the risk externality to which they were exposed.

- Magnanimity (altruism and compassion) – A wide range of resource-satiable values (ones to which there is little objection aside from cost-based considerations) are realised if and when it becomes possible to do so using a minute fraction of total resources. This may encompass basic welfare provisions and income guarantees for all human individuals.

- Continuity (fair resource allocation) – (i) maintain order and provide the institutional stability needed for actors to benefit from opportunities for trade behind the current veil of ignorance, including social safety nets; and (ii) prevent concentration and permutation from radically exceeding the levels implicit in the current social contract (basically, gross and growing resource inequality).

- Mind crime prevention – AI is governed in such a way that maltreatment of sentient digital minds is avoided or minimised.

- Population policy – Procreative choices, concerning what new beings to bring into existence, are made in a coordinated manner and with sufficient foresight to avoid unwanted Malthusian dynamics and political erosion. (For example, what happens to population policy if humans become economically unproductive and governments are no longer incentivised to support them?)

- Responsibility and wisdom – The seminal applications of advanced AI are shaped by an agency (individual or distributed) that has an expansive sense of responsibility and the practical wisdom to see what needs to be done in radically unfamiliar circumstances.

As the authors stress, these criteria aren’t ‘the answer’. Rather, they’re ideas to be built upon. What is clear, however, is that given the speed of AI development, any near-term policies will need to closely consider their longer-term implications. As the capacities of intelligent systems continue to compound, so too will their impacts. Policy decisions, whether deliberate or not, will therefore affect the development of AI and its deployment throughout society. Establishing criteria to assess these decisions will help ensure that AI is safe and beneficial to humanity.

Conclusion

As AI becomes more pervasive, questions of policy will intensify. While these questions are shrouded in complexity, policymakers can help ensure the safe passage of AI that’s beneficial to humanity. As representatives of the public, they have a responsibility to be informed and involved.

I hope this essay on some of the key issues and arguments in AI policy was helpful. I’d love any feedback. Feel free to get in touch either by commenting below or by emailing me at nik@bitsandatoms.co.

1. For example, see: Etzioni, Oren (2017) “How to Regulate Artificial Intelligence.” New York Times, 1 September.

2. Ahmed, K. (2015) “Google’s Demis Hassabis – misuse of artificial intelligence ‘could do harm’.” BBC News.

3. Scherer, Matthew U. (2015) “Regulating Artificial Intelligence Systems: Risks, Challenges, Competencies, and Strategies.” Harvard Journal of Law & Technology.

4. US National Science and Technology Council, Committee on Technology (2016) “Preparing for the Future of Artificial Intelligence.” Executive Office of the President.

5. UK House of Commons Science and Technology Committee (2016) “Robotics and Artificial Intelligence.”

6. Brundage, Miles, and Joanna Bryson (2016) “Smart Policies for Artificial Intelligence.” arXiv, p. 2.

7. Nusser, Sebastian (2009) “Robust Learning in Safety-Related Domains: Machine Learning Methods for Solving Safety-Related Application Problems.” OAI.

8. Australia-New Zealand Counter-Terrorism Committee (2015) “National Guidelines for Protecting Critical Infrastructure from Terrorism.” p. 3.

9. Amodei, Dario, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, and Dan Mané (2016) “Concrete Problems in AI Safety.” arXiv.

10. For example, see: Calo, Ryan (2014) “The Case for a Federal Robotics Commission.” Brookings; Scherer, Matthew U. (2015) “Regulating Artificial Intelligence Systems: Risks, Challenges, Competencies, and Strategies.” Harvard Journal of Law & Technology; UK House of Commons Science and Technology Committee (2016) “Robotics and Artificial Intelligence.”

11. Onder, Harun (2016) “The Age Factor and Rising Nationalism.” Brookings Institution.

12. Edelman (2017) “2017 Edelman Trust Barometer.”

13. Calo, Ryan (2014) “The Case for a Federal Robotics Commission.” Brookings.

14. Supra note 3.

15. Supra note 13.

16. Supra note 3, p. 381.

17. Supra note 13. NB: While Calo refers only to robotics in this publication, his position has since been extended to AI more generally. For example, see this article.

18. Supra note 3.

19. Supra note 6.

20. Future of Life Institute (2016) “A Survey of Research Questions for Robust and Beneficial AI.”

21. Bostrom, Nick, Allan Dafoe, and Carrick Flynn (2016) “Policy Desiderata in the Development of Machine Superintelligence.” Future of Humanity Institute.